Microsoft unveils Xbox content filters to stop the swears and toxicity – The Verge

Microsoft is starting to test new content filters for its Xbox Live messaging system today in an effort to curb toxicity on its platform. Microsoft has handled moderation on Xbox Live for almost 20 years, including the ability to report messages, Gamertags, photos, and much more, but this new effort puts players in control of what they see on Xbox Live. Text-based filters for Xbox Live messages are rolling out first, but the company has a much broader goal: filtering Xbox Live party chat in the future, so that live audio calls could be cleaned up with real-time bleeps, much like broadcast TV.

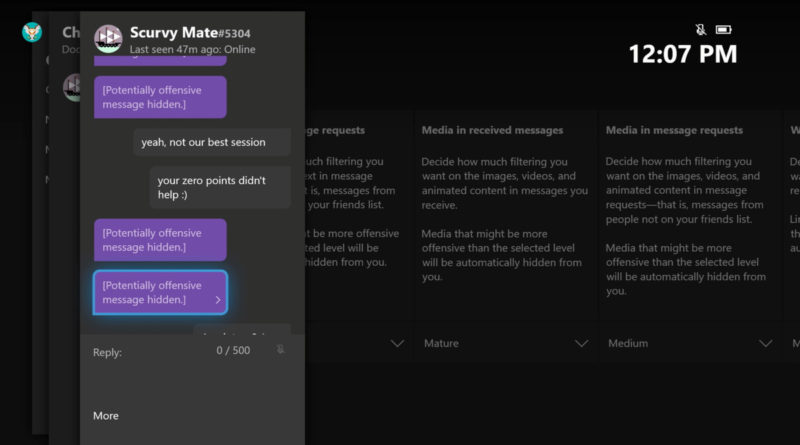

There will be four new levels of text-based filtration available to Xbox Live users initially: Friendly, Medium, Mature, and Unfiltered. As you might imagine, Friendly is the most child-friendly option, designed to filter out all potentially offensive messages. At the opposite end, there’s Unfiltered, which will work as Xbox Live messaging mostly works today.

Filtered messages will appear behind a new “potentially offensive hidden message” warning. If you’re using an adult Xbox Live account, you’ll be able to click through to see the content and, if need be, report it using the regular tools. Child accounts will be placed in the Friendly level by default and blocked from seeing potentially offensive messages. Parents will be able to manage the levels through Microsoft’s family settings, and the filtering will work across Xbox One, Xbox Game Bar, and the Xbox apps for Windows 10, iOS, and Android.
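Microsoft hasn’t said how the four levels map onto individual messages, so what follows is only a rough sketch of the behavior described above, not Microsoft’s implementation. It assumes a hypothetical classifier that tags each message with a severity (`Severity`, `MAX_VISIBLE`, `Viewer`, and `render` are all invented for illustration), treats the levels as ordered thresholds, and assumes adult accounts default to Unfiltered.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Optional


class FilterLevel(IntEnum):
    """The four levels Microsoft named, ordered from strictest to most permissive."""
    FRIENDLY = 0
    MEDIUM = 1
    MATURE = 2
    UNFILTERED = 3


class Severity(IntEnum):
    """Hypothetical severity tiers a message classifier might assign."""
    CLEAN = 0
    MILD = 1
    STRONG = 2
    SEVERE = 3


# Hypothetical mapping: the most severe message each level still shows.
MAX_VISIBLE = {
    FilterLevel.FRIENDLY: Severity.CLEAN,
    FilterLevel.MEDIUM: Severity.MILD,
    FilterLevel.MATURE: Severity.STRONG,
    FilterLevel.UNFILTERED: Severity.SEVERE,
}

WARNING = "Potentially offensive hidden message"


@dataclass
class Viewer:
    is_child: bool
    level: Optional[FilterLevel] = None  # None = use the account's default level

    def effective_level(self) -> FilterLevel:
        if self.level is not None:
            return self.level
        # Child accounts default to Friendly; the adult default here is an assumption.
        return FilterLevel.FRIENDLY if self.is_child else FilterLevel.UNFILTERED


def render(text: str, severity: Severity, viewer: Viewer, reveal: bool = False) -> str:
    """Return the message text, or the warning if the viewer's level hides it."""
    if severity <= MAX_VISIBLE[viewer.effective_level()]:
        return text
    # Adult accounts can click through the warning; child accounts stay blocked.
    if reveal and not viewer.is_child:
        return text
    return WARNING
```

Treating the levels as cumulative thresholds keeps the rule simple: a message is hidden whenever its severity exceeds what the viewer’s level allows, and only adult accounts get the click-through.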

For Microsoft, this isn’t just about filtering out the bad stuff people might send to you while you’re playing the latest Call of Duty title. It goes much deeper. “You see stories of African American players being called out for lynching in multiplayer sessions, or female gamers in competitive environments being called all sorts of names and feeling harassed in the outside world, or members of our LGBTQ community feeling like they can’t speak with their voice on Xbox Live for fear that they’ll be called out,” explains Dave McCarthy, head of Microsoft’s Xbox operations, in an interview with The Verge. “If we really are to realize our potential as an industry and have this wonderful medium come to everybody, there’s just no place for that.”

McCarthy and his team have been looking at various ways to identify the context of messages using a mix of artificial intelligence and filtering. “Context is a really tricky thing in the gaming space,” admits McCarthy. “It’s one thing to say you’re going to go on a killing spree when you’re getting ready for a multiplayer mission in Halo, and it’s another when that’s uttered in another setting. Finding ways for us to understand context and nuance is a never-ending battle.”

![image 2](https://cdn.vox-cdn.com/uploads/chorus_asset/file/19285103/1wKBN51.png)

I spent part of my meeting with McCarthy and his team in one of the more bizarre moments of my career: bombarding an Xbox Live account with rude messages in an attempt to beat the filters. I tried some of the basic ways to get around typical text filters, such as disguising swear words with accents or symbols, and Microsoft caught them. Even context-based messages about murdering waves of zombies were picked up correctly. I didn’t have enough time to test the system fully, and nothing is foolproof; the internet can quickly adapt to create offensive phrases and memes that attack certain communities. Microsoft demonstrated how it could take a particular phrase and instantly add it to the company’s filtering system, although someone would still need to flag that phrase in the first place.
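The basic evasion tricks described above, accents and symbol swaps, are commonly countered by normalizing text before matching it against a phrase list. The sketch below shows that general technique in Python; the substitution table, the `PhraseFilter` class, and the plain substring matching are all assumptions for illustration, not Microsoft’s system, and it does nothing about context, which is the harder problem McCarthy describes.

```python
import re
import unicodedata

# Hypothetical table of common symbol and digit swaps ("@" for "a", "$" for "s", ...).
SWAPS = str.maketrans({"@": "a", "4": "a", "3": "e", "1": "i",
                       "0": "o", "$": "s", "5": "s", "7": "t"})


def normalize(text: str) -> str:
    """Fold accents, symbol swaps, and punctuation padding into plain lowercase letters."""
    # NFKD splits "ñ" into "n" plus a combining tilde; drop the combining marks.
    decomposed = unicodedata.normalize("NFKD", text)
    unaccented = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    # Lowercase, undo symbol swaps, then strip everything but a-z and spaces
    # (so "d.a.r.n" collapses to "darn"; non-Latin scripts are dropped entirely).
    swapped = unaccented.lower().translate(SWAPS)
    return re.sub(r"[^a-z ]+", "", swapped)


class PhraseFilter:
    """Hypothetical blocklist matched against normalized text."""

    def __init__(self, phrases=()):
        self._phrases = set()
        for phrase in phrases:
            self.add(phrase)

    def add(self, phrase: str) -> None:
        # A newly flagged phrase applies to the very next message checked.
        normalized = normalize(phrase)
        if normalized.strip():  # ignore phrases that normalize to nothing
            self._phrases.add(normalized)

    def matches(self, message: str) -> bool:
        text = normalize(message)
        return any(phrase in text for phrase in self._phrases)
```

For example, `PhraseFilter(["darn"]).matches("D@rñ it")` is true once the accent and the `@` are folded away. A real system would also need word-boundary handling so it doesn’t flag innocent words that happen to contain a blocked string.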

Microsoft is now trying to be more open and transparent about how it moderates Xbox Live and the choices it makes to enforce these filters across the community. “If we’re going to ask our users to be accountable for their actions on Xbox Live and our other services, we need to be transparent about our value system, our practices, and why we do what we do,” explains McCarthy. “We’ve had success recently in doing things like providing evidence, and pointing people back to our new Xbox Live community standards, as part of our transparency effort around investigations we do.”

At present, Xbox Live users can dig into the company’s enforcement website to find hints about which specific message got their account into trouble. But that’s not the same as showing the offending message directly to the person responsible for the account. Microsoft is considering changes to how Xbox Live users are notified when they break the rules, but the company is also looking far beyond text-based messages.

![image 3](https://cdn.vox-cdn.com/uploads/chorus_asset/file/19285104/YbonrPA.png)

Microsoft also wants to tackle toxicity in Xbox Live voice chat. A lot of toxic behavior and harassment happens when someone is invited into an Xbox Live party chat. Microsoft Research has already developed speech-to-text capabilities that transcribe audio in real time, and the Xbox division is looking at putting them to use.

“What we’ve started to experiment with is ‘Hey, if we’re real-time translating speech to text, and we’ve got these text filtering capabilities, what can we do in terms of blocking possible communications in a voice setting?’” explains McCarthy. “It’s early days there, and there are a myriad of other AI and technology that we’re looking to stack around the voice problem, things like emotion detection and context detection that we can apply there. I think we’re learning overall… we’re taking our time with this to do it right.”

That’s obviously hugely complex, both in terms of the technology to achieve it and also the privacy aspects involved, but Microsoft’s goal is to have something similar to the filtering that TV stations can achieve on live broadcasts.

“An ultimate goal could be similar to what you’d expect on broadcast TV where people are having a conversation, and in real time, we’re able to detect a bad phrase and beep it out for users who don’t want to see that,” explains Rob Smith, a program manager on the Xbox Live engineering team. “It’s a great goal, but we’re going to have to take steps towards that.” Microsoft will also have to consider the latency of analyzing voice in real time, since party chat is mostly used to coordinate in-game moves.

It sounds like, before attempting any automated audio filtering, Microsoft is looking at analyzing party chat speech to detect toxicity. “In the meantime, we could do things like analyzing a person’s speech and figuring out, overall, what’s their level of toxicity they’re using in this session? And maybe doing things like automatically muting them,” reveals Smith.
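Smith’s idea of scoring a speaker’s overall toxicity across a session could look something like the sketch below. It assumes the speech-to-text stage McCarthy describes already produces one transcript per utterance; the `SessionToxicityMonitor` class, the `is_toxic` classifier it takes, and the window, threshold, and minimum-utterance values are all hypothetical.

```python
from collections import defaultdict, deque
from typing import Callable, Deque, Dict, Set


class SessionToxicityMonitor:
    """Hypothetical per-session tracker: transcribed utterances in, mute decisions out."""

    def __init__(self, is_toxic: Callable[[str], bool], window: int = 20,
                 threshold: float = 0.3, min_utterances: int = 5):
        self.is_toxic = is_toxic              # assumed text classifier run on each transcript
        self.threshold = threshold            # toxic fraction that triggers an automatic mute
        self.min_utterances = min_utterances  # don't judge a speaker on too little speech
        # Keep only each speaker's most recent `window` verdicts.
        self._history: Dict[str, Deque[bool]] = defaultdict(lambda: deque(maxlen=window))
        self.muted: Set[str] = set()

    def score(self, speaker: str) -> float:
        """Fraction of the speaker's recent utterances flagged as toxic."""
        history = self._history.get(speaker)
        return sum(history) / len(history) if history else 0.0

    def observe(self, speaker: str, transcript: str) -> bool:
        """Record one transcribed utterance; return True if the speaker is now muted."""
        if speaker in self.muted:
            return True
        history = self._history[speaker]
        history.append(self.is_toxic(transcript))
        if len(history) >= self.min_utterances and self.score(speaker) >= self.threshold:
            self.muted.add(speaker)
        return speaker in self.muted
```

Scoring over a sliding window rather than reacting to single utterances is one way to avoid muting someone over a lone trash-talk line in a heated match, though it also means toxic speech gets through until the threshold is reached.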

Microsoft is running a variety of internal tests to figure out what will work best, and it plans to consult its Xbox Live community on how to implement its party chat plans. It’s an ambitious plan, but Microsoft will have to be very careful in how it analyzes this audio, as the privacy implications are huge. “We have to respect privacy requirements at the end of the day for our users, so we’ll step into it in a thoughtful manner, and transparency will be our guiding principle to have us do the right thing for our gamers,” says McCarthy.

Microsoft could also find itself in a free speech debate with these filters, even though the Supreme Court affirmed earlier this year that First Amendment rights don’t apply to private platforms like Xbox Live. Microsoft is being clear about its intentions here and what it expects from a community it ultimately controls.

“Xbox Live is not a free speech platform. It is a curated community where we want you to have some degrees of personal freedom in that, which is why we’re doing four different settings to start here,” explains McCarthy. “But we don’t want to be ambiguous about what we stand for. This has to be a place where everyone has fun, and everything we do feature-wise and moderation practice-wise is going to vector across that set of values.”